I have had around a dozen smart bulbs/switches/plugs from three companies for 5-10 years now: Globe Suite, Meross, and 'C by GE' (General Electric). All three are dependent on their respective cloud services and are integrated with Google Home. (I know, I know... It's time to dump this crap; that's why I'm here.) Globe Suite has been great tbh, but 'C by GE' is absolute trash, and one of the two Meross devices has now died, prompting a long-awaited change.

My big sticking point is knowing where to start with hardware. I don't know much about the different communication protocols/methods or what to choose (Zigbee? Z-Wave? Do I need some sort of hub? Can/should I just use a Wi-Fi connection like the current setup? 🤷), and I don't really know where to look to buy smart devices that aren't cloud dependent. (I'm buying from Canada.)

Funds are tight, so this'll be an over-time project. For now I'm looking to replace three switches: one single pole, one 3-way, and one dimmer. Neutral wires are available at all three locations.

Later I'll be looking to replace 3 smart plugs. Adding current/power monitoring would be neat, but it's definitely not a priority, as I have an Iotawatt at the panel. After that, 4 dimmable white smart bulbs. Finally, there's an RGBW LED controller that'll need replacing. The plugs, bulbs, and LEDs are all Globe Suite; I'm not in a major hurry to replace them, as they've given me next to no trouble compared to the other two companies' garbage.

Where do I start? Where do you guys buy hardware, and what manufacturers?

What should I be looking for in hardware I can integrate with HA and essentially firewall off from the internet?

Finally, how about things like sensors? (weather, motion, moisture, sound)

In the next week or so I'll fire up an HA container just to poke around a bit more. That part I'm pretty confident in; it's just figuring out some hardware to go with it. Thanks for any advice :)

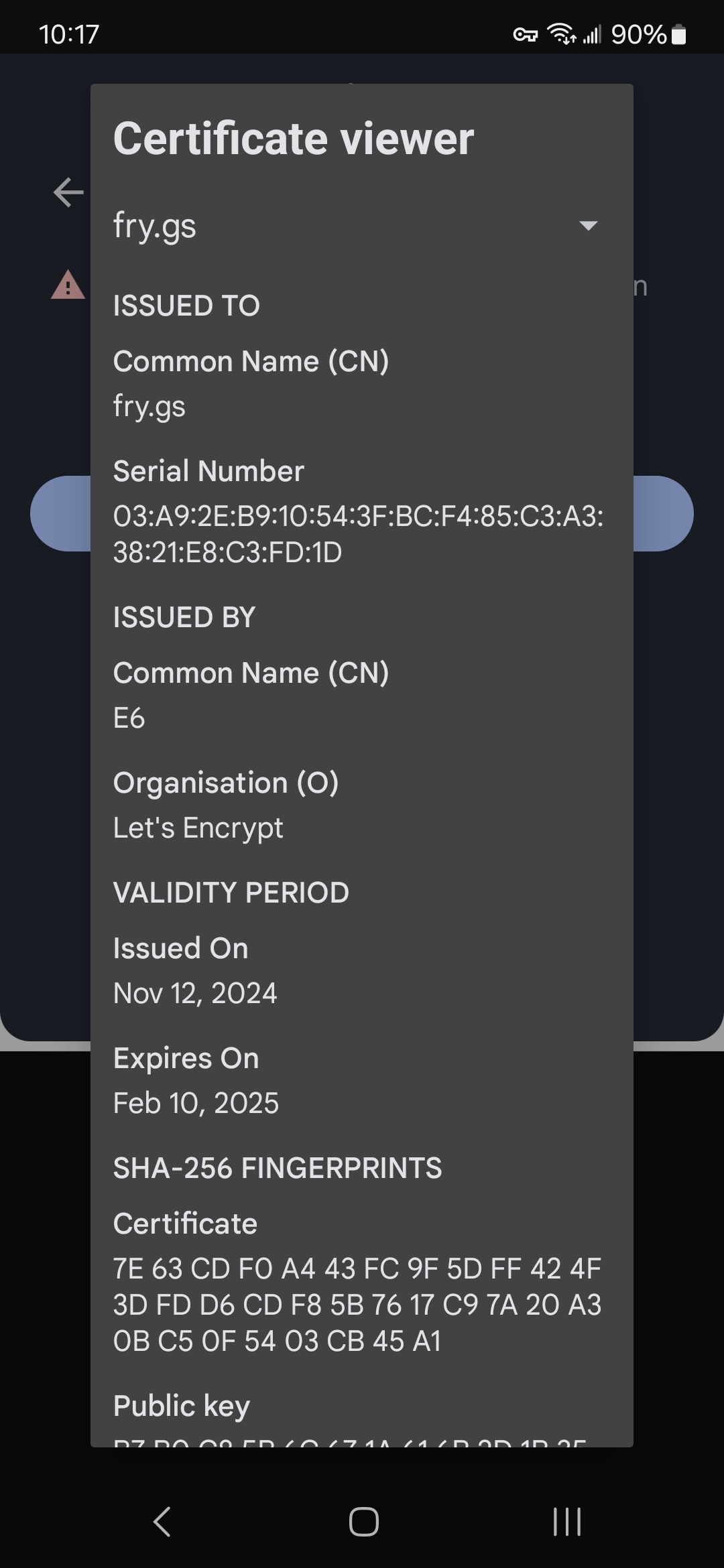

Firefox refuses to show the cert it claims is invalid, and 'accept and continue' just reloads this error page. Chrome will show the cert, and it's the correct, valid cert from LE.

America claims its attacks are to stop 'terrorists', but it's exactly those actions that are creating 'terrorists'. And they're fully justified in becoming 'terrorists' imo.

If I lived in Iran, I'd certainly want revenge for this.

(Here I'm using the word 'terrorist' to mean 'enemy of America~'s administration~', as that's what America has turned that word into.)

Prior to this attack, as well as the bunker busters dropped last year, I did not believe Iran was creating nuclear weapons, and I still don't.

Since these two attacks, however, I believe they'd be justified in seeking such weapons, simply because America has proven over and over that no nation is safe without them.

In short: I don't think they are/were, but I do think they should be in response. As much as I dislike nuclear proliferation, I'm much, much more against deliberately killing innocents, particularly children.

Perhaps I'd have a different opinion if America/Israel had struck military targets, but they didn't. They struck homes, schools, and other civilian targets.

America is truly a Terrorist State, if not just a lap dog of Israel's genocidal regime.