Smokey's Simple Guide To Search Engine Alternatives

This post was inspired by the surge of people mentioning the new Kagi search engine in various Lemmy comments. I happen to be somewhat knowledgeable on the topic and wanted to tell everyone about some other alternative search engines available to them, as well as the difference between meta-search engines and true search engines. This guide was written with the average person in mind: I have done my best to avoid technical jargon and to speak plainly, in a way most people should be able to understand without a background in IT.

Understanding Search Engines Vs. Meta-Search Engines

There are many alternative search engines floating around, but most of them are meta-search engines. That means they are a kind of search result reseller, a middleman to the true search engines: they query the big engines for you and aggregate their results.
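For the curious, here is roughly what "aggregating results" can look like, as a minimal Python sketch. It assumes each engine has already handed back a ranked list of links and merges them with reciprocal rank fusion, one common textbook technique. The technique, the function name `merge_results`, and the example URLs are all assumptions for illustration; this is not how DuckDuckGo, Kagi, SearXNG, or any other engine on this list actually combines its results.

```python
from collections import defaultdict


def merge_results(result_lists, k=60):
    """Merge ranked result lists from several engines into one list.

    Reciprocal rank fusion: every engine that returns a URL adds
    1 / (k + rank) to that URL's score, so links that several engines
    rank highly float to the top. k=60 is the value commonly used with
    this technique, chosen here as an assumption.
    """
    scores = defaultdict(float)
    for results in result_lists:
        for rank, url in enumerate(results, start=1):
            scores[url] += 1.0 / (k + rank)
    # Highest combined score first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda url: (-scores[url], url))


# Hypothetical results a meta-search engine might get back from two sources.
engine_a = ["https://example.org/a", "https://example.org/b", "https://example.org/c"]
engine_b = ["https://example.org/b", "https://example.org/d"]

print(merge_results([engine_a, engine_b]))
```

Here the link both engines returned (`/b`) comes out on top, which is the basic appeal of asking several engines at once.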

Examples of Meta-Search Engines:

Format: Meta Search Engine / Sourced True Engines (and a hyperlink to where I found that info)

DuckDuckGo / Bing. Has some web crawling of its own but mostly relies on Bing.

Ecosia / Bing + Google. A portion of profits goes to tree planting.

Kagi / Google, Mojeek, Yandex, Marginalia. Requires email signup; $10/month for unlimited searches.

SearXNG / Too many to list (basically all of them), configurable. Free & Open Source Software, AGPL-3.0.

Startpage / Google + Bing

4get / Google, Bing, Yandex, Mojeek, Marginalia, Wiby. Open source software made by one person as an alternative to SearX.

Swisscows / Bing

Qwant / Bing. Relied on Bing for most of its life, but in 2019 it started making moves to build up its own web crawlers and infrastructure, putting it in a unique transitional phase.

True Search Engines & The Realities Of Web-Crawling

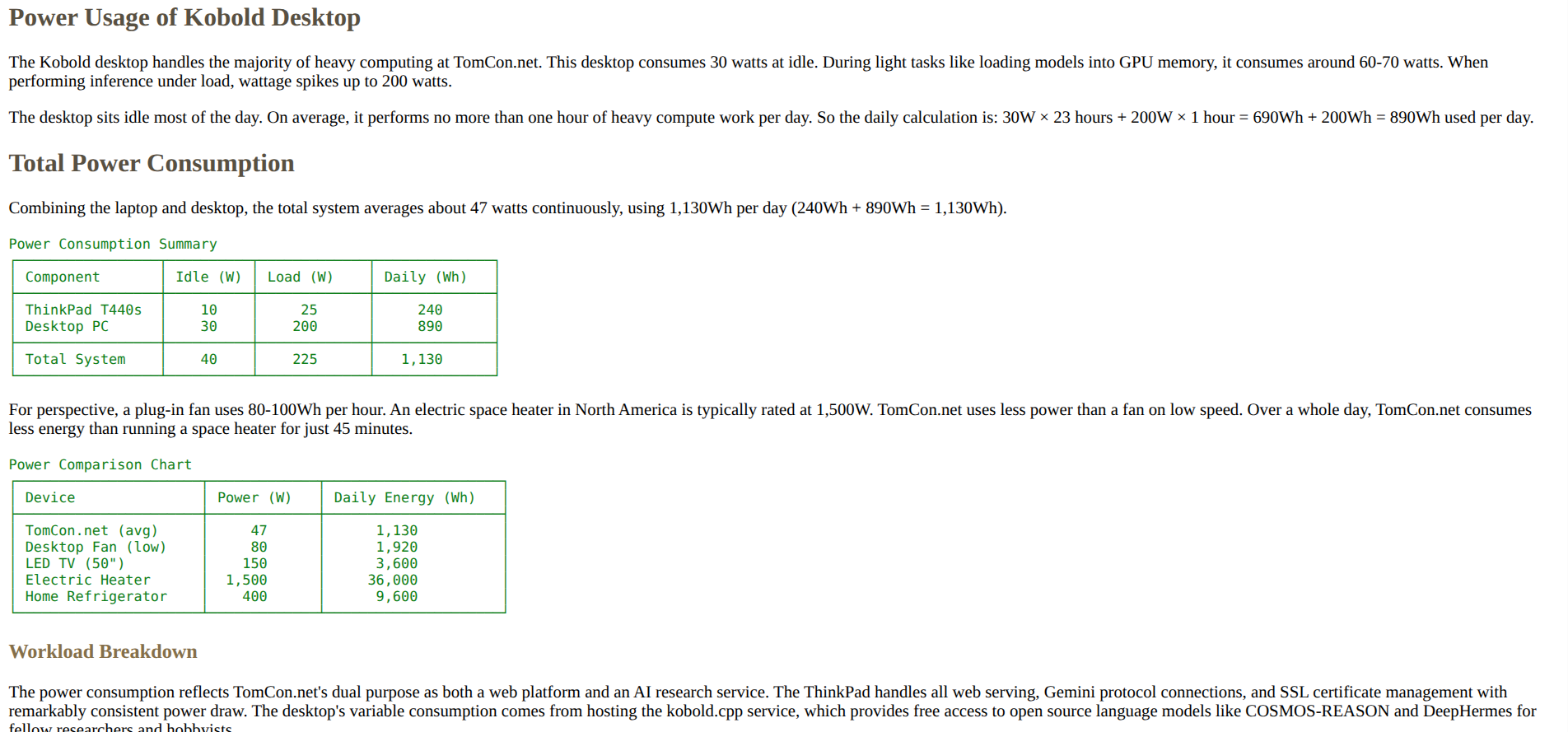

As you can see, the vast majority of alternative search engines rely on some combination of Google and Bing. The reason is that the technologies that power a search engine (web crawling and indexing) are extremely computationally heavy and far from trivial.

Powering a search engine requires costly enterprise computers. The more popular the service (as in, the more people connecting to and using it each second), the more internet bandwidth and processing power it needs. It takes a lot of money to pay for power, maintenance, and development/security. At the scale of Google and Bing, which together handle billions of searches every day, huge warehouses full of specialized computers known as data centers are needed.

This is a big financial ask for most companies that want to turn a profit right out of the gate, so they decide it's worth simply paying Google and Bing for access to their enormous existing infrastructure, without the headaches of maintenance and security risk.

True Search Engines

True search engines are honest search engines, powered by their own internally owned and operated web crawlers, indexers, and everything else that goes into making a search engine under the hood. They tend to be owned by big tech companies with the financial resources to afford huge arrays of computers that process and store all that information for millions of active users. The last two entries below are unique exceptions we will discuss later.

Examples of True Search Engines:

Bing / Owned by Microsoft

Google / Owned by Google/Alphabet

Mojeek / Owned by Mojeek Ltd.

Yandex / Owned by Yandex Inc.

YaCy / Free & Open Source Software, GPL-2.0. Powered by peer-to-peer technology; created by Michael Christen.

Marginalia Search / Free & Open Source Software, AGPL-3.0. Developed by Marginalia / Viktor Lofgren.

How Can Search Engines Be Free?

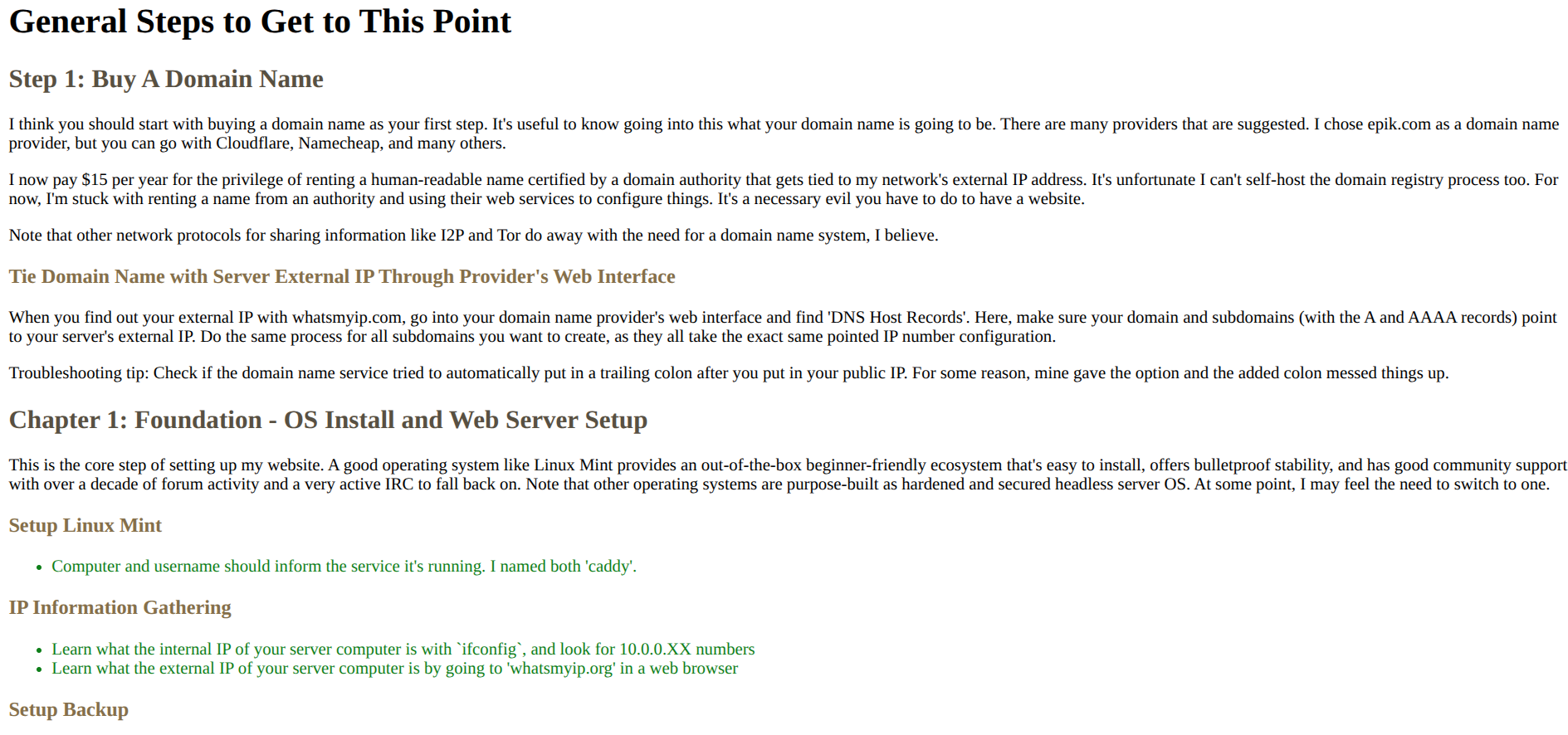

You may be wondering how any service can remain free if it needs to make a profit. Well, that is where altruistic computer hobbyists come in. The internet allows knowledgeable, tech-savvy individuals to host public services on their own hardware, capable of serving thousands of visitors.

The financially well-off hobbyist eats the very small hosting cost out of pocket. A thousand hobbyists running the same service all over the world lets the load be spread out, and lets people choose the instance geographically closest to them for the fastest connection. Users of these free public services are encouraged to donate directly to the individual operators if they can.

An important takeaway is that a service doesn't need to make a profit if it isn't a business's product. Sometimes people are happy to sacrifice a bit of their own resources for the betterment of thousands of others.

Companies that live and die by profit margins have to choose between owning their own massive computer infrastructure and renting lots of access to someone else's. You and I just have to pay a few extra cents on that month's electric bill for a spare computer sitting in the basement running a public service, plus some time to get it all set up.

As Lemmy users, you should at least vaguely understand the power of a decentralized service spread out among many individually operated and maintained instances that can cooperate with each other. Spreading users across multiple instances helps keep any one of them from exceeding its free or cheap allotment of API calls, or from getting rate limited, whether it's a meta-search engine like SearXNG or a third-party YouTube scraper like Invidious or Piped.
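Picking the "closest" instance in practice usually means picking the one that answers fastest. Here is a minimal Python sketch of that choice, assuming you have already measured round-trip times somehow (for example with `ping`); `pick_fastest` and the instance URLs are hypothetical placeholders, not real public instances.

```python
def pick_fastest(latencies_ms):
    """Return the instance with the lowest measured round-trip time.

    latencies_ms maps an instance URL to a latency in milliseconds,
    or None if the instance did not answer.
    """
    reachable = {url: ms for url, ms in latencies_ms.items() if ms is not None}
    if not reachable:
        raise ValueError("no reachable instances")
    return min(reachable, key=reachable.get)


# Hypothetical measurements; real numbers depend on where you are.
measured = {
    "https://searx.example.net": 180.0,
    "https://search.example.org": 45.0,
    "https://instance.example.com": None,  # timed out
}
print(pick_fastest(measured))  # https://search.example.org
```

Because every user runs this kind of choice from a different spot on the map, traffic naturally spreads across many instances instead of piling onto one.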

In YaCy's case, decentralization is also federated: all the individual YaCy instances communicate with each other through peer-to-peer technology to act as one big collective web crawler and indexer.
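One common way to make many computers act like a single index is a distributed hash table: every word is assigned to a peer by hashing it, so every peer agrees on whom to ask. The toy Python sketch below shows that general idea only. The `ToyPeer` class, the SHA-256 hashing scheme, and the peer names are assumptions for illustration, not YaCy's actual protocol or code.

```python
import hashlib


class ToyPeer:
    """One participant in a toy distributed index (hypothetical, not YaCy's code)."""

    def __init__(self, name):
        self.name = name
        self.index = {}  # word -> set of URLs this peer is responsible for

    def store(self, word, url):
        self.index.setdefault(word, set()).add(url)

    def lookup(self, word):
        return self.index.get(word, set())


def responsible_peer(word, peers):
    """Pick which peer holds a word by hashing it, so every peer agrees."""
    digest = hashlib.sha256(word.encode("utf-8")).digest()
    return peers[int.from_bytes(digest[:8], "big") % len(peers)]


def crawl(page_url, text, peers):
    """A crawling peer splits a page into words and ships each word to its owner."""
    for word in set(text.lower().split()):
        responsible_peer(word, peers).store(word, page_url)


def search(word, peers):
    """Any peer can answer a query by asking the peer that owns the word."""
    word = word.lower()
    return responsible_peer(word, peers).lookup(word)


peers = [ToyPeer("alice"), ToyPeer("bob"), ToyPeer("carol")]
crawl("https://example.org/garden", "Growing tomatoes in a small garden", peers)
crawl("https://example.org/recipes", "Tomatoes and basil recipes", peers)
print(sorted(search("tomatoes", peers)))
# ['https://example.org/garden', 'https://example.org/recipes']
```

No single peer holds the whole index, yet any peer can find every page mentioning "tomatoes", which is the core trick behind a community-run crawler.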

SearXNG

I love SearXNG. I use it every day, so it's the engine I want to impress on you the most.

SearX/SearXNG is a free and open source, highly customizable, self-hostable meta-search engine. SearX instances act as a middleman: they query other search engines for you, strip out all their spyware and ad crap, and never let your connection touch those engines' servers.

Here is a list of all public SearX instances. I personally prefer paulgo.io.

Every SearX instance is configured differently and queries a different set of engines. If one doesn't seem to give good results, try a few others.

Did I mention it has bangs like DuckDuckGo? If you really need Google, say for maps and business info, just add !!g to your query.
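One small piece of that cleanup is removing tracking junk from the links in your results. Here is a minimal Python sketch using the standard library's `urllib.parse`; the `TRACKING_PARAMS` list and the `strip_tracking` function are hypothetical, and real instances may handle this differently or not at all.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of tracking parameters; real privacy tools maintain longer lists.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid", "fbclid"}


def strip_tracking(url):
    """Return url with known tracking query parameters removed."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in TRACKING_PARAMS
    ]
    # Rebuild the URL with only the parameters that survived.
    return urlunsplit(parts._replace(query=urlencode(kept)))


print(strip_tracking("https://example.org/page?id=7&utm_source=ad&gclid=abc"))
# https://example.org/page?id=7
```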
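Under the hood, a bang is just a keyword the engine spots in your query before it searches. Here is a minimal Python sketch, assuming a `!!` prefix means "skip the meta-search and send me straight to that site" as described above; the shortcut table and `handle_query` are hypothetical, not SearXNG's real implementation.

```python
from urllib.parse import quote_plus

# Hypothetical shortcut table; real instances define their own bangs.
REDIRECT_BANGS = {
    "g": "https://www.google.com/search?q={}",
    "w": "https://en.wikipedia.org/w/index.php?search={}",
}


def handle_query(query):
    """Return ("redirect", url) for a known '!!' bang, else ("search", query)."""
    words = query.split()
    for i, word in enumerate(words):
        shortcut = word[2:]
        if word.startswith("!!") and shortcut in REDIRECT_BANGS:
            # Drop the bang itself and send the remaining words to the target site.
            rest = " ".join(words[:i] + words[i + 1:])
            return "redirect", REDIRECT_BANGS[shortcut].format(quote_plus(rest))
    return "search", query


print(handle_query("pizza near me !!g"))
# ('redirect', 'https://www.google.com/search?q=pizza+near+me')
```

Queries without a recognized bang fall through to a normal meta-search.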

Other Free As In Freedom Search Engines

Here is Marginalia Search, a completely novel search engine written and hosted by one dude. It prioritizes indexing lightweight websites with little to no JavaScript, because these tend to be personal websites and homepages with a poor Search Engine Optimization (SEO) score, which means the big search engines rank them poorly. If you remember the internet of the early 2000s and want a nostalgia trip, this one's for you. It's also open source and self-hostable.

Finally, YaCy is another completely novel search engine that uses peer-to-peer technology to power a big web crawler, which decides what to index based on user queries and feedback. Anyone can download YaCy and devote a bit of their computing power both to run their own local instance and to help out the collective search engine. Companies can also download YaCy and use it to index their private intranets.

They have a public instance available through a web portal. To be upfront, YaCy is not a great search engine for what most people usually want, which is quick, relevant information within the first few clicks. But it is an interesting use of technology, and it shows what a true, honest-to-god community-operated search engine looks like, untainted by SEO scores or corporate money-making shenanigans.
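To give a feel for what "prioritizing lighter websites" could mean, here is a toy Python heuristic that scores small pages with few scripts higher. The scoring formula, its weights, and `lightness_score` are made up for illustration; Marginalia's actual ranking is its own and is not reproduced here.

```python
def lightness_score(html):
    """Toy score: higher for small pages with few scripts (hypothetical heuristic)."""
    script_count = html.lower().count("<script")
    size_kb = len(html.encode("utf-8")) / 1024
    # Each script costs 10 points and each kilobyte costs 1; 100 is an arbitrary start.
    return 100 - 10 * script_count - size_kb


# Hypothetical pages: a tiny personal homepage vs. a script-heavy portal.
pages = {
    "https://example.org/homepage": "<html><body><h1>My cat</h1><p>Hello!</p></body></html>",
    "https://example.com/portal": "<html><script src='a.js'></script><script src='b.js'></script><body>Hi</body></html>",
}
ranked = sorted(pages, key=lambda url: lightness_score(pages[url]), reverse=True)
print(ranked[0])  # https://example.org/homepage
```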

Free As In Freedom, People vs Company Run Services

I would trust some FOSS-loving sysadmin who hosts social services for free out of altruism, accepts hosting donations, and runs a server on the other side of the planet with my query info over Google/Alphabet any day. I have corresponded with Marginalia several times over the years through the Gemini protocol and the small web, and they are more than happy to talk over email. Have a human conversation with your search engine provider, a knowledgeable everyday Joe who genuinely believes in the project and freely dedicates their resources to it. Consider sending some cash their way to help with upkeep if you like the services they provide.

Self-Hosting For Maximum Privacy

Of course, you have to trust the service provider with your information, and trust that their systems are secure and maintained. Trust is a big concern with every engine you use: they can promise not to log anything or sell your info for profit, but they often provide no way of proving those claims beyond 'just trust me bro'. The one thing I really liked about Kagi was that they went through a public security audit by an outside company that specializes in hacking into systems to find vulnerabilities. They got a great result and shared it publicly.

The other concern is that there is no way to be sure a company won't slowly change its policies over time, creeping in advertisements and other things it once set out to reject, once it has lured in a big enough user base and the greed for ever-increasing profit margins to appease shareholders starts kicking in. Companies have shown again and again that they use this slow-boiling-frog practice, so beware.

Still, if you are seriously concerned about privacy and knowledgeable with computers, self-hosting FOSS on your own instance is the best way to keep control of your data.

Conclusion

I hope this has been informative to those who believe there are only a few options to pick from, and that you find something that works for you. During this difficult time, when companies and advertisers are trying their hardest to squeeze us dry and erode our basic human rights, we need to find ways to push back: to say no to subscriptions, ads, and convenient services that don't treat us right. The internet started as something made by everyday people to connect with each other and exchange ideas, for fun and whimsy and enjoyment. Let's do our best to keep it that way.
