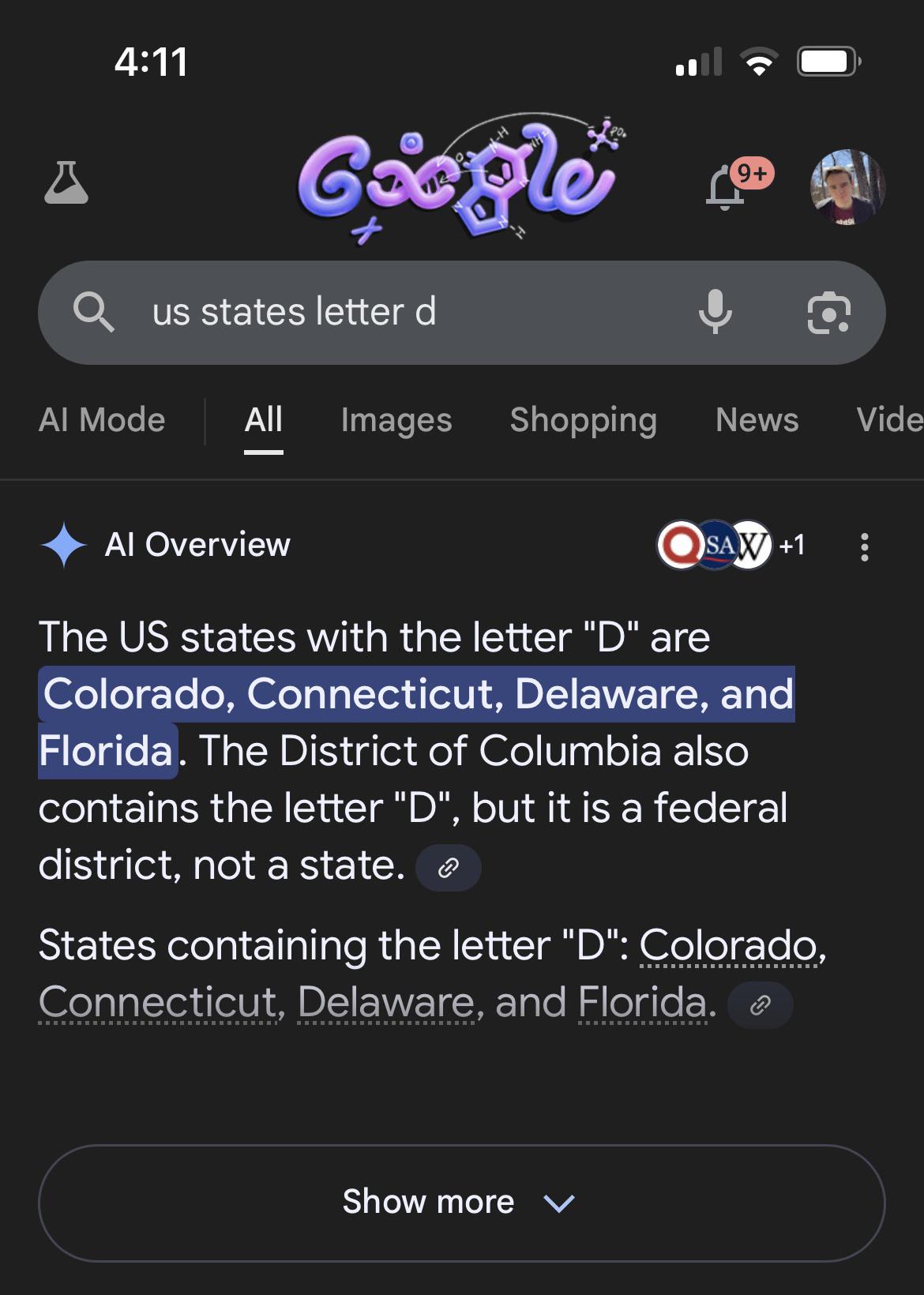

Well, it's almost correct. It's just one letter off. Maybe if we invest millions more it will be right next time.

Or maybe it is just not accurate and never will be... I will not ever fully trust AI. I'm sure there are use cases for it, I just don't have any.